Explainable Artificial Intelligence (XAI): Bridging Transparency and Trust in Modern AI Systems

Abstract

As artificial intelligence (AI) systems continue to shape modern society, the demand for explainable, interpretable, and trustworthy systems has become critical. Explainable Artificial Intelligence (XAI) provides a framework for understanding and interpreting model behavior, ensuring that complex algorithms remain transparent, accountable, and human-centric. This paper explores the principles, mathematical formulations, visualization techniques, and evaluation metrics of XAI, along with real-world applications and future research directions.

Introduction

AI systems now match or exceed human-level accuracy on some benchmark tasks in areas such as image recognition, natural language understanding, and predictive analytics. However, their inner workings often remain opaque, a phenomenon termed the 'black-box problem.' This lack of transparency hinders adoption in safety-critical sectors, where understanding why a model made a decision is as important as the decision itself. Explainable AI seeks to address this by providing mechanisms that allow users to interpret, trust, and challenge AI predictions. Transparency builds accountability, fosters user confidence, and supports regulatory compliance.

Theoretical Foundations of Explainability

Explainable AI (XAI) operates through two primary approaches to unveil the decision-making processes of artificial intelligence.

The trade-off between accuracy and interpretability can be expressed as:

$$\text{Objective} = \lambda_1 \cdot \text{Accuracy}(f) + \lambda_2 \cdot \text{Explainability}(f)$$

A general explainability function E(f) can be defined as:

$$E(f) = \alpha\, C(f) + \beta\, I(f)$$
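As a minimal sketch of how the weighted objective above could guide model selection, the Python snippet below scores two candidate models under different weightings. The function names, the candidate dictionary, and all numeric scores are hypothetical placeholders chosen for illustration; they are not measured results.

```python
def objective(accuracy, explainability, lambda_1=0.5, lambda_2=0.5):
    """Weighted sum: lambda_1 * Accuracy(f) + lambda_2 * Explainability(f)."""
    return lambda_1 * accuracy + lambda_2 * explainability


# Hypothetical scores in [0, 1]; illustrative values only, not measurements.
candidates = {
    "decision_tree": {"accuracy": 0.80, "explainability": 0.90},
    "transformer": {"accuracy": 0.95, "explainability": 0.30},
}


def best_model(models, lambda_1, lambda_2):
    """Return the name of the model with the highest weighted objective."""
    return max(
        models,
        key=lambda name: objective(
            models[name]["accuracy"],
            models[name]["explainability"],
            lambda_1,
            lambda_2,
        ),
    )


# Equal weights favor the more explainable model in this toy setup;
# weighting accuracy heavily favors the more accurate one.
balanced_choice = best_model(candidates, 0.5, 0.5)
accuracy_first_choice = best_model(candidates, 0.9, 0.1)
```

The choice of λ₁ and λ₂ encodes how much accuracy a practitioner is willing to give up for explainability, so the selected model changes as the weights change.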

Explainability Techniques

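The SHAP contribution for a feature i is given by:

$$\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!} \left[ f(S \cup \{i\}) - f(S) \right]$$

where N is the set of all features, S ranges over the subsets of features that exclude i, and f(S) is the model output when only the features in S are present.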
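The Shapley formula can be evaluated exactly for a small feature set by enumerating every subset. The sketch below does this in plain Python; `shapley_values` and `toy_value` are hypothetical names, and the toy value function stands in for a real model's output on a feature subset. Exact enumeration grows exponentially with the number of features, so practical SHAP implementations rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value_fn):
    """Exact Shapley values by enumerating all subsets S of N without i."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):  # |S| ranges from 0 to n - 1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of i to coalition S.
                total += weight * (value_fn(s | {i}) - value_fn(s))
        phi[i] = total
    return phi


def toy_value(coalition):
    """Hypothetical value function: two main effects plus one interaction."""
    v = 0.0
    if "x1" in coalition:
        v += 2.0
    if "x2" in coalition:
        v += 1.0
    if "x1" in coalition and "x2" in coalition:
        v += 1.0  # interaction term, split equally by the Shapley value
    return v


features = ["x1", "x2", "x3"]
phi = shapley_values(features, toy_value)
```

In this toy case the interaction term is shared equally between x1 and x2, the unused feature x3 receives zero, and the attributions sum to the difference between the value of the full feature set and the empty set.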

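- LIME – Local Interpretable Model-agnostic Explanations: fits a simple surrogate model around a single prediction.

- SHAP – SHapley Additive exPlanations: attributes a prediction to features using Shapley values from cooperative game theory.

- Grad-CAM – Gradient-weighted Class Activation Mapping: highlights the input regions that most influence a CNN's prediction.

- Integrated Gradients – computes feature attributions by integrating gradients along a path from a baseline input to the actual input.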
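To make the last technique in the list concrete, the following sketch approximates Integrated Gradients with a midpoint Riemann sum. It assumes a scalar-valued model and uses finite-difference gradients so that it runs without any framework; real implementations would normally use automatic differentiation. The names `numerical_gradient`, `integrated_gradients`, and `toy_model` are hypothetical.

```python
def numerical_gradient(f, x, eps=1e-5):
    """Central-difference gradient of a scalar function f at point x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def integrated_gradients(f, x, baseline, steps=50):
    """Approximate IG_i = (x_i - b_i) * integral of df/dx_i along the path."""
    diff = [xi - bi for xi, bi in zip(x, baseline)]
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [bi + alpha * d for bi, d in zip(baseline, diff)]
        grad = numerical_gradient(f, point)
        avg_grad = [a + g / steps for a, g in zip(avg_grad, grad)]
    return [d * a for d, a in zip(diff, avg_grad)]


def toy_model(x):
    """Hypothetical differentiable model used only for illustration."""
    return x[0] ** 2 + 3.0 * x[1]


x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(toy_model, x, baseline)
```

A useful sanity check is the completeness property: the attributions should sum, up to numerical error, to the difference between the model output at the input and at the baseline.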

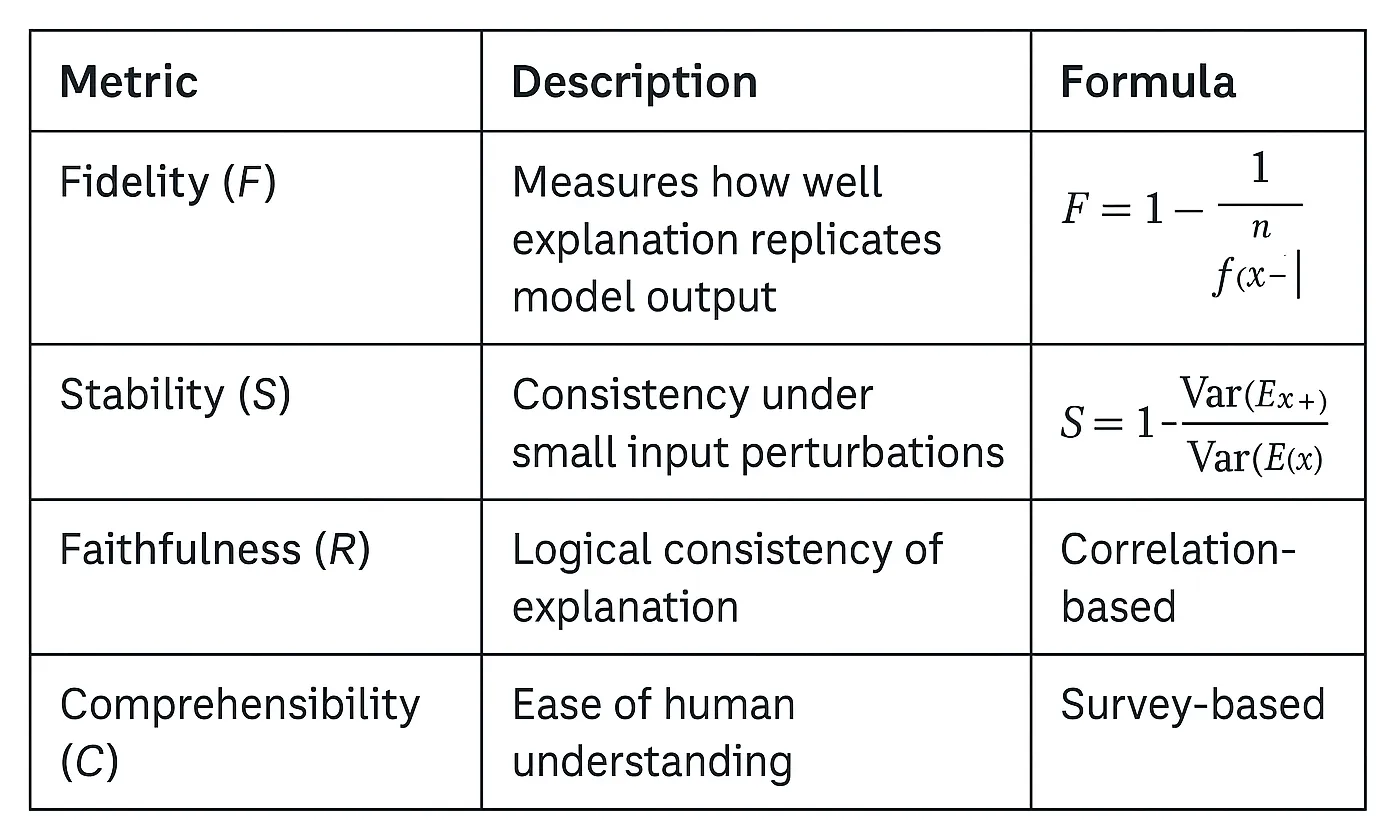

Evaluation Metrics

The evaluation metrics in this section assess the reliability, consistency, and comprehensibility of explanation methods, helping determine how effectively an AI model communicates its reasoning process.

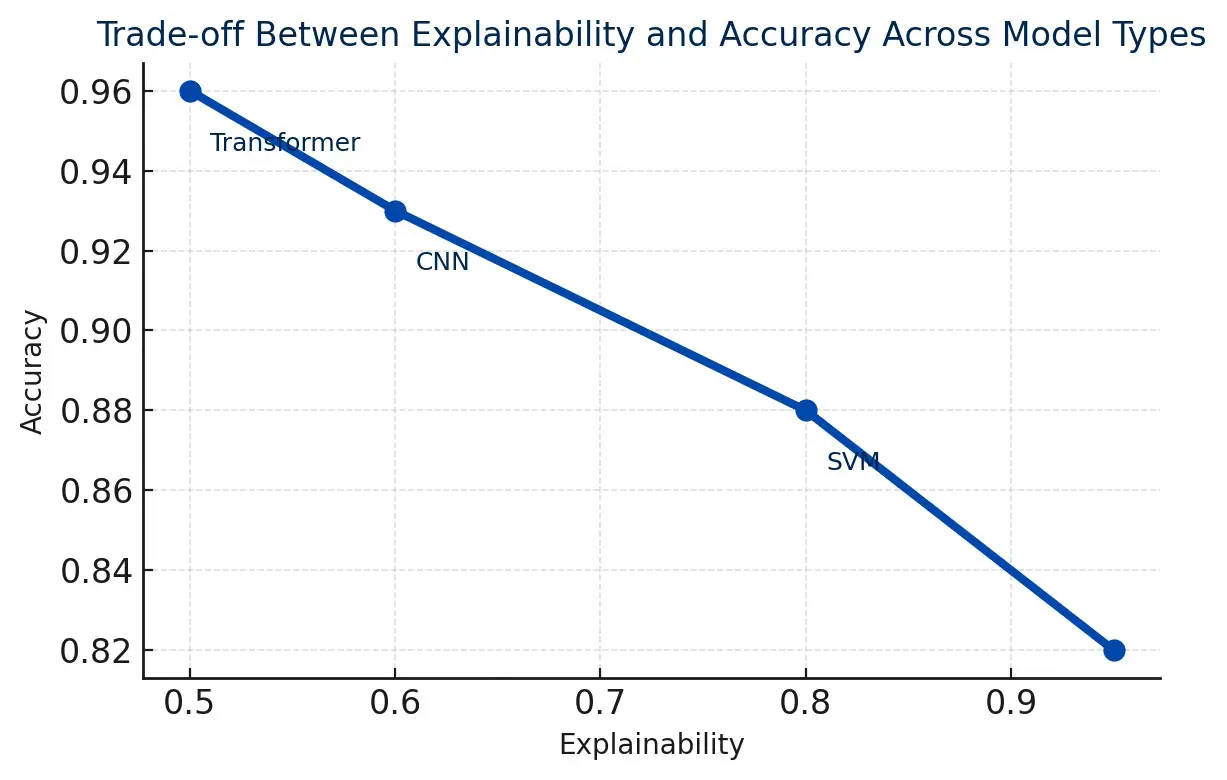

Visual Analysis of Explainability–Accuracy Trade-off

This section highlights the balance between model interpretability and accuracy. The visual below demonstrates how different AI model families trade off transparency for performance.

Simpler models such as Decision Trees are highly explainable but moderately accurate, while complex architectures like Transformers achieve superior accuracy at the cost of transparency.

This trade-off represents one of the most fundamental challenges in Explainable AI: achieving both interpretability and accuracy in modern models.

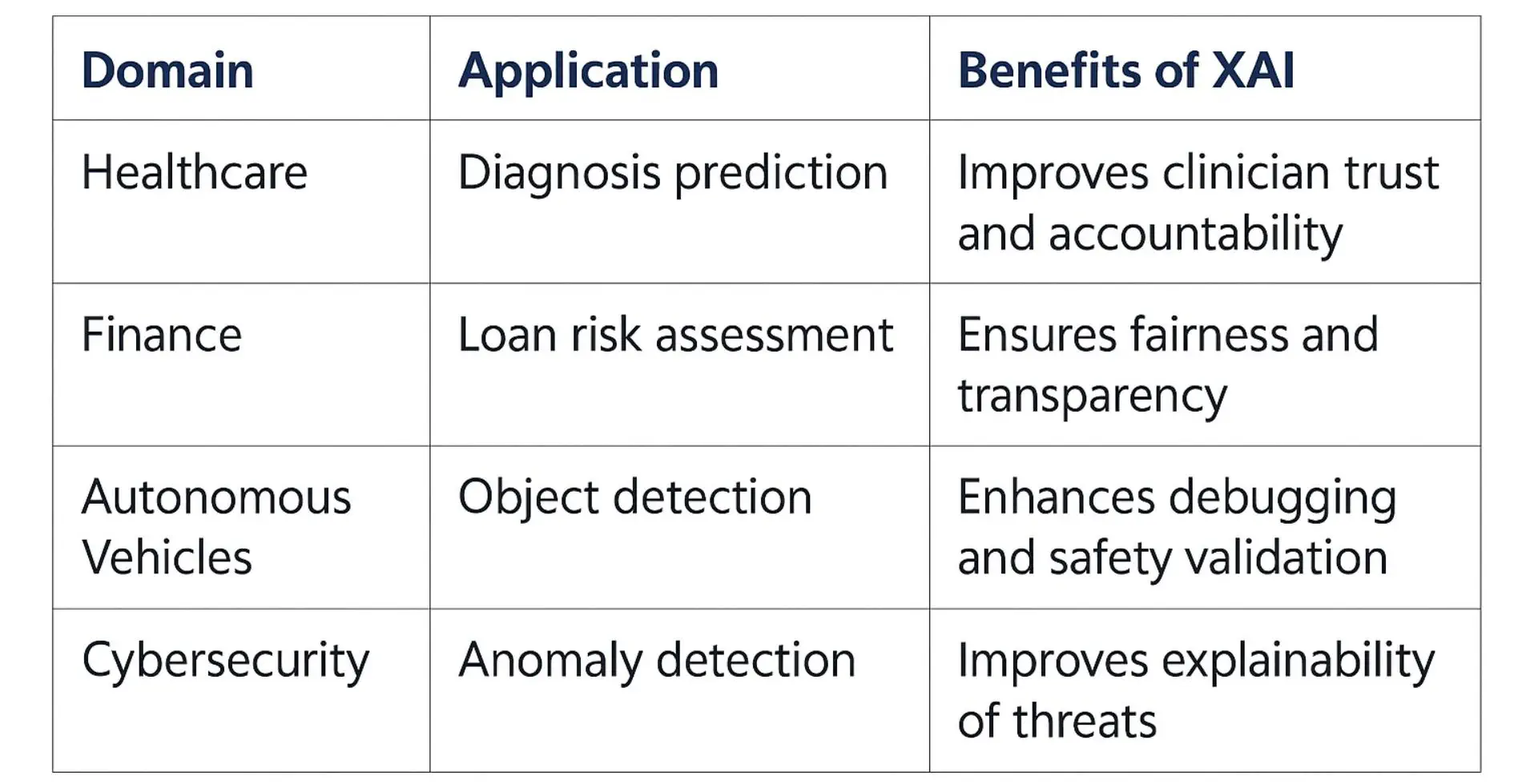

Applications of Explainable AI

Explainable AI (XAI) plays a crucial role in making complex AI models more transparent and interpretable. It helps users understand how decisions are made, ensuring accountability and trust across various sectors. The table below highlights key domains where XAI is applied and the benefits it provides in each context.

Advanced Mathematical Models

$$\text{Consistency} = \frac{1}{n} \sum_{i=1}^{n} \frac{\text{Rank}_{\text{SHAP}}(x_i) + \text{Rank}_{\text{IG}}(x_i)}{2}$$

Higher consistency implies stronger interpretability and alignment between multiple explanation techniques.
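The sketch below evaluates the consistency formula under one possible reading, in which Rank_SHAP(xᵢ) and Rank_IG(xᵢ) are the ranks of feature i under each method (rank 1 being the largest absolute attribution). Under that assumption, and without ties, the averaged ranks always equal (n + 1) / 2, so the sketch also computes Spearman's rank correlation. Spearman's coefficient is a standard agreement measure substituted here for comparison; it is not part of the formula above. All attribution values are hypothetical.

```python
def ranks(scores):
    """Rank features by absolute attribution; rank 1 is the largest."""
    order = sorted(range(len(scores)), key=lambda i: -abs(scores[i]))
    result = [0] * len(scores)
    for position, index in enumerate(order, start=1):
        result[index] = position
    return result


def mean_average_rank(rank_a, rank_b):
    """The formula as written: (1/n) * sum of (rank_a + rank_b) / 2."""
    n = len(rank_a)
    return sum((a + b) / 2 for a, b in zip(rank_a, rank_b)) / n


def spearman(rank_a, rank_b):
    """Spearman rank correlation for untied ranks (substituted measure)."""
    n = len(rank_a)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical attributions for four features; not real model output.
shap_scores = [0.5, -0.2, 0.1, 0.05]
ig_scores = [0.4, 0.1, -0.3, 0.02]

shap_ranks = ranks(shap_scores)
ig_ranks = ranks(ig_scores)
averaged = mean_average_rank(shap_ranks, ig_ranks)
agreement = spearman(shap_ranks, ig_ranks)
```

A Spearman value near 1 indicates that the two methods order the features almost identically, while values near 0 or below indicate disagreement.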

Future Research Directions

1. Causal Explainability – linking model outputs to true causal variables.

2. Interactive Visualization – building adaptive explanations.

3. LLM Explainability – making transformer reasoning visible.

4. Ethical AI – integrating fairness and interpretability.

Conclusion

Explainable AI forms the cornerstone of Trustworthy AI by enabling models to justify their actions. As AI systems grow more autonomous, transparency becomes indispensable for human–AI collaboration and ethical deployment. Balancing performance, fairness, and interpretability will define the next frontier of responsible AI research.

References

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). "Why should I trust you?": Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 1135-1144).

- Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems, 30.

- Doshi-Velez, F., & Kim, B. (2017). Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608.

- Gilpin, L. H., Bau, D., Yuan, B. Z., Bajwa, A., Specter, M., & Kagal, L. (2018). Explaining explanations: An overview of interpretability of machine learning. In 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA) (pp. 80-89). IEEE.

- Adadi, A., & Berrada, M. (2018). Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access, 6, 52138-52160.