Natural Language Processing in the Transformer Era: Methods, Evaluation, and Responsible Deployment

Abstract

Natural Language Processing (NLP) has undergone rapid advances during the past decade, transitioning from feature-engineered pipelines and statistical models to neural approaches dominated by the Transformer architecture. This paper surveys foundational linguistics, data and tokenization strategies, core tasks (classification, sequence labeling, sequence-to-sequence, and generative dialogue), and the model evolution from RNN/CNN to attention-based architectures. We detail pretraining objectives (masked-language modeling, next-token prediction), instruction tuning, preference optimization (e.g., RLHF and related methods), parameter-efficient finetuning (adapters, LoRA, QLoRA), and emerging retrieval-augmented generation (RAG) systems that blend parametric and non-parametric memory. We review evaluation: classic task metrics (accuracy, F1), MT/NLG metrics (BLEU, ROUGE, BERTScore), and holistic, scenario-based frameworks such as GLUE, SuperGLUE, BIG-bench, and HELM. Efficiency topics include quantization, sparse Mixture-of-Experts (MoE), and inference optimizations for production. We analyze robustness, privacy, and safety, covering data governance, differential privacy, model alignment, toxicity/misinformation, and evaluation of social harms. Finally, we outline practical deployment patterns (observability, grounding with RAG, prompt and policy layers) and discuss open problems and research opportunities in controllability, multimodality, long-context reasoning, and low-resource NLP. Throughout, we propose concrete image/diagram prompts suitable for technical illustration.

Introduction

Natural Language Processing (NLP) seeks to enable computers to understand, generate, and interact using human language. From early rule-based systems to statistical machine learning, and now to large-scale neural language models, the field has continually traded handcrafted features for learned representations. The pivotal inflection came in 2017 with the Transformer architecture, which replaced recurrence and convolutions with attention mechanisms and unlocked highly parallel training. This design improved translation quality, reduced training time, and provided a flexible backbone for most modern NLP systems. The Transformer’s encoder-only, encoder–decoder, and decoder-only variants underlie BERT (bidirectional masked modeling), T5 (text-to-text), and GPT-style autoregressive models, respectively, which together catalyzed transfer learning in NLP. These pretraining-then-adaptation regimes, enhanced by instruction tuning and preference optimization, elevated zero/one/few-shot generalization and enabled general-purpose language agents. At the same time, the productionization of NLP has forced practitioners to grapple with robustness, safety, privacy, energy efficiency, and governance. Organizations now deploy NLP systems into high-stakes contexts (healthcare, finance, legal discovery, public services), where reliability, controllability, and accountability matter as much as benchmark performance. This paper synthesizes methods, systems, and evaluation practices that guide the development of modern NLP applications, and it highlights the open problems that are most urgent for research and industry.

Linguistic and Data Foundations

Languages exhibit phonology, morphology, syntax, semantics, and pragmatics. While large language models (LLMs) do not encode explicit grammars, they implicitly learn many regularities from data. Still, explicit linguistic analysis remains useful for dataset design, error analysis, and evaluation of generalization across domains and dialects. Corpus construction involves coverage (topic, domain), balance (class ratios), representativeness (register, genre), and legality (licensing, privacy). Data governance has become a first-class concern: sourcing, redaction, consent management, and auditability are as important as model accuracy. For multilingual applications, curators must reconcile script variations, morphological richness, code-switching, and orthographic differences.

Tokenization and Subword Units

Tokenization bridges raw text and model inputs. Fixed-vocabulary word models struggle with rich morphology and rare words. Subword segmentation methods such as Byte Pair Encoding (BPE), WordPiece, and SentencePiece tackle open-vocabulary issues and support multilinguality. BPE starts from characters (or bytes) and iteratively merges the most frequent adjacent symbol pair; SentencePiece trains directly on raw text without pre-tokenization and can fall back to bytes for unseen characters, producing language-independent subwords. Subword tokenization reduces out-of-vocabulary (OOV) failures, stabilizes training, and enables efficient sharing of morphemes across languages, though it can also fragment named entities or lose token boundaries that are semantically meaningful in certain scripts. Recent models experiment with character-level or byte-level tokenization to simplify pipelines and handle arbitrary inputs.
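
To make the BPE merge procedure concrete, the following is a minimal sketch on a toy word list. The function names (`learn_bpe`, `merge_pair`, `segment`) and the alphabetical tie-breaking rule are illustrative assumptions, not the behavior of any particular tokenizer library; production implementations also handle word-boundary markers, bytes, and much larger corpora.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn an ordered list of merges from a toy corpus given as a list of words."""
    # Each word starts as a tuple of single characters, weighted by its frequency.
    vocab = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs across the corpus.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair wins; ties are broken alphabetically (an assumption).
        best = min(pairs, key=lambda p: (-pairs[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def segment(word, merges):
    """Segment a new word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


corpus = ["low", "low", "lower", "lowest", "newer", "newer"]
merges = learn_bpe(corpus, num_merges=3)
pieces = segment("slower", merges)  # an unseen word still decomposes into known pieces
```

Because unseen words fall back to smaller learned units (ultimately single characters), this kind of segmentation avoids a hard OOV failure, which is the property the paragraph above describes.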

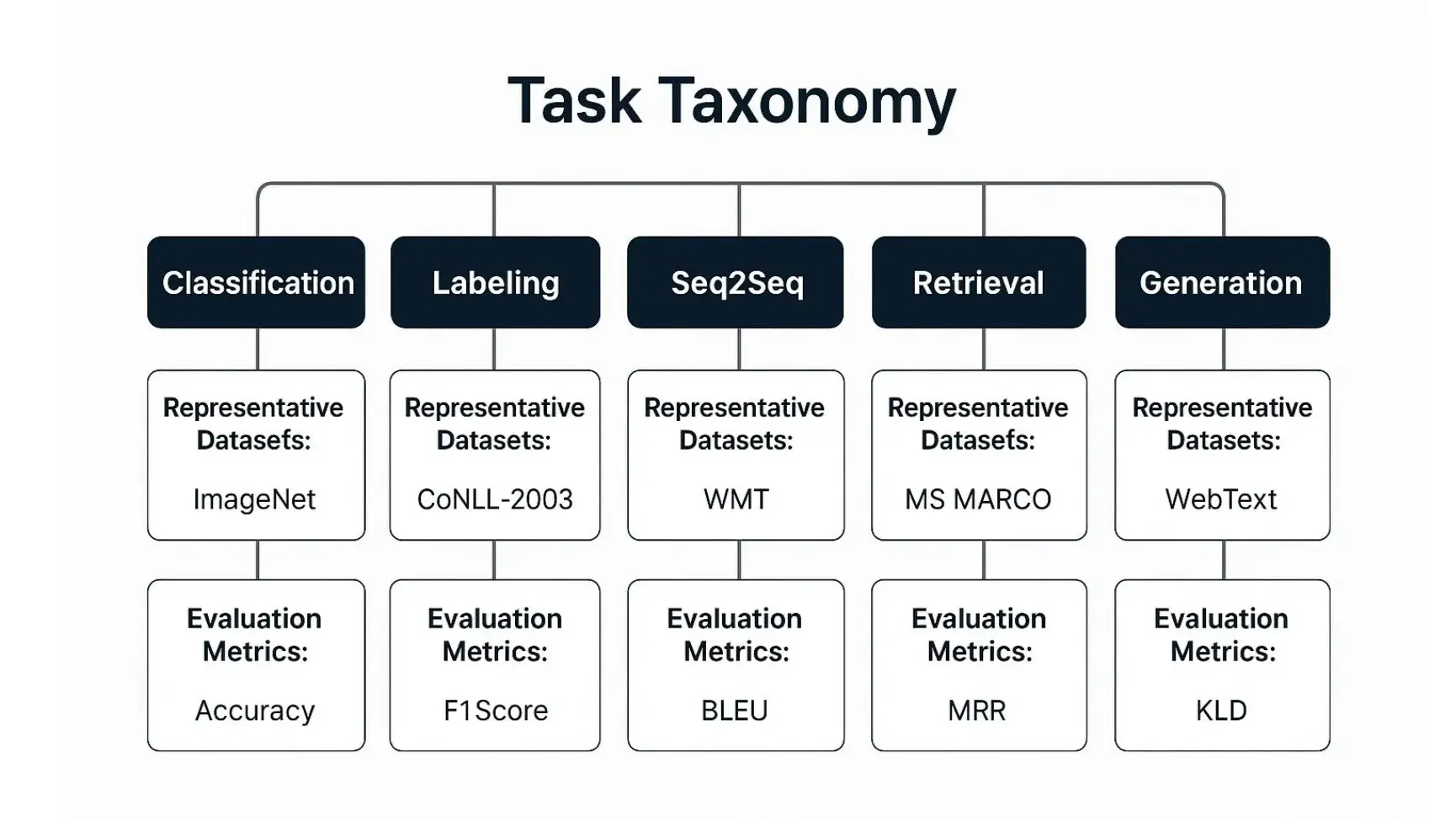

Core Problem Settings in NLP

NLP spans a spectrum of tasks with different objective structures and evaluation criteria. Classification includes sentiment analysis, topic labeling, toxicity detection, and intent detection. Sequence labeling covers part-of-speech tagging, chunking, and named entity recognition (NER). Pairwise reasoning tasks include natural language inference (NLI), paraphrase identification, semantic textual similarity, and retrieval scoring. Sequence-to-sequence (seq2seq) generation drives machine translation, abstractive summarization, and data-to-text generation. Open-ended generation powers dialogue agents, creative writing, and code generation. Information-seeking tasks include question answering (extractive and generative) and retrieval-augmented workflows that ground responses in external corpora. Benchmarks such as GLUE and SuperGLUE collect diverse NLU tasks, while BIG-bench probes long-tail reasoning and emergent capabilities; HELM organizes holistic, multi-metric evaluation across scenarios, safety, and calibration.

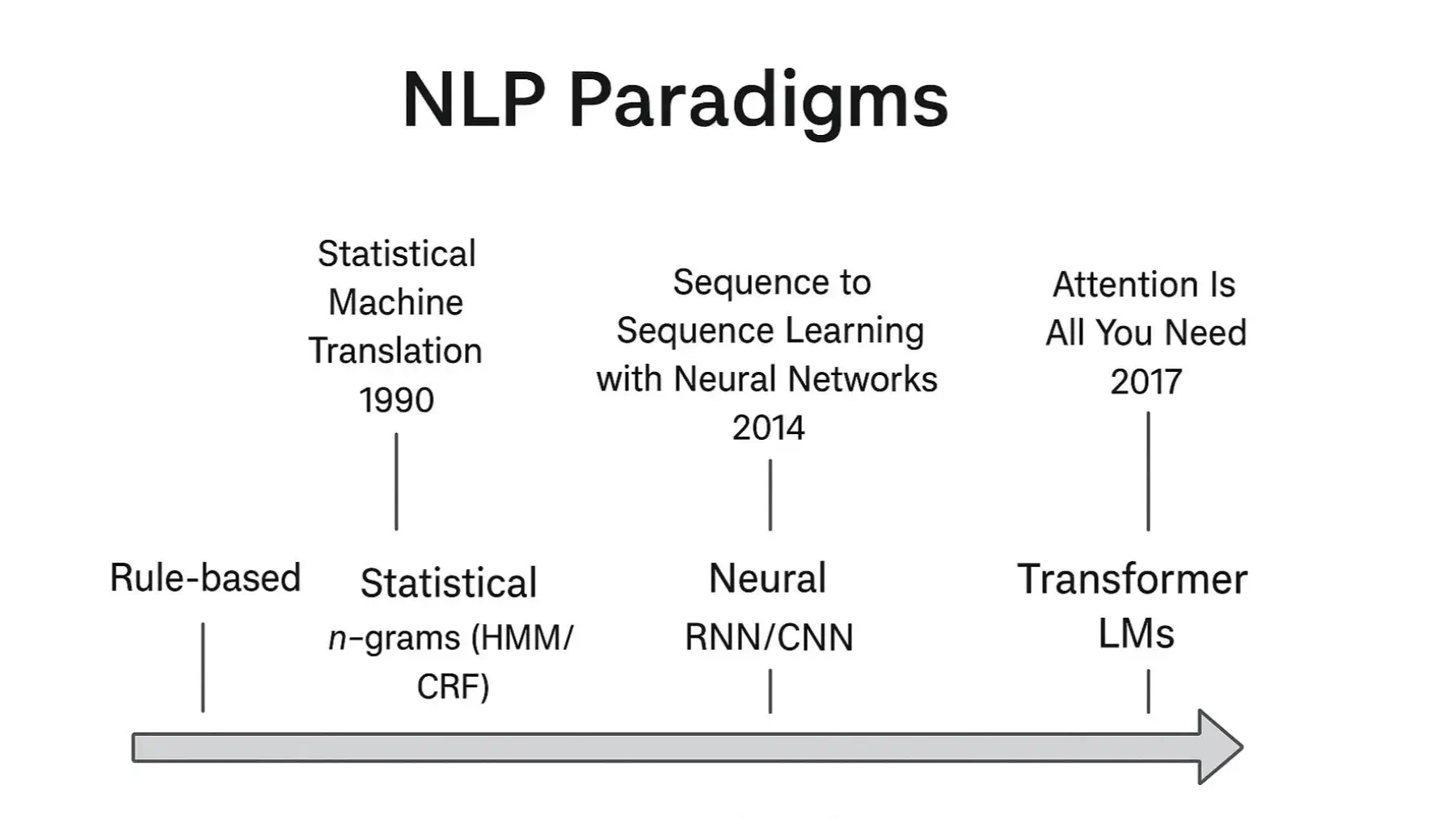

Modeling Evolution

Before Transformers, statistical NLP relied on n-gram language models, maximum entropy classifiers, and graphical models such as HMMs and CRFs. Neural encoders (CNN/RNN/LSTM/GRU) improved distributed representations but had limitations in long-range dependency modeling and parallelism. Attention mechanisms reduced reliance on fixed-size hidden states, enabling better alignment in machine translation and other seq2seq tasks. The Transformer architecture generalized attention and replaced recurrence entirely, unlocking scalable parallel training and becoming the de facto backbone for modern NLP. Encoder-only models (e.g., BERT) excel at comprehension and classification; decoder-only models (GPT-style) excel at generation; encoder-decoder models (T5/BART) unify seq2seq tasks under a text-to-text framework.

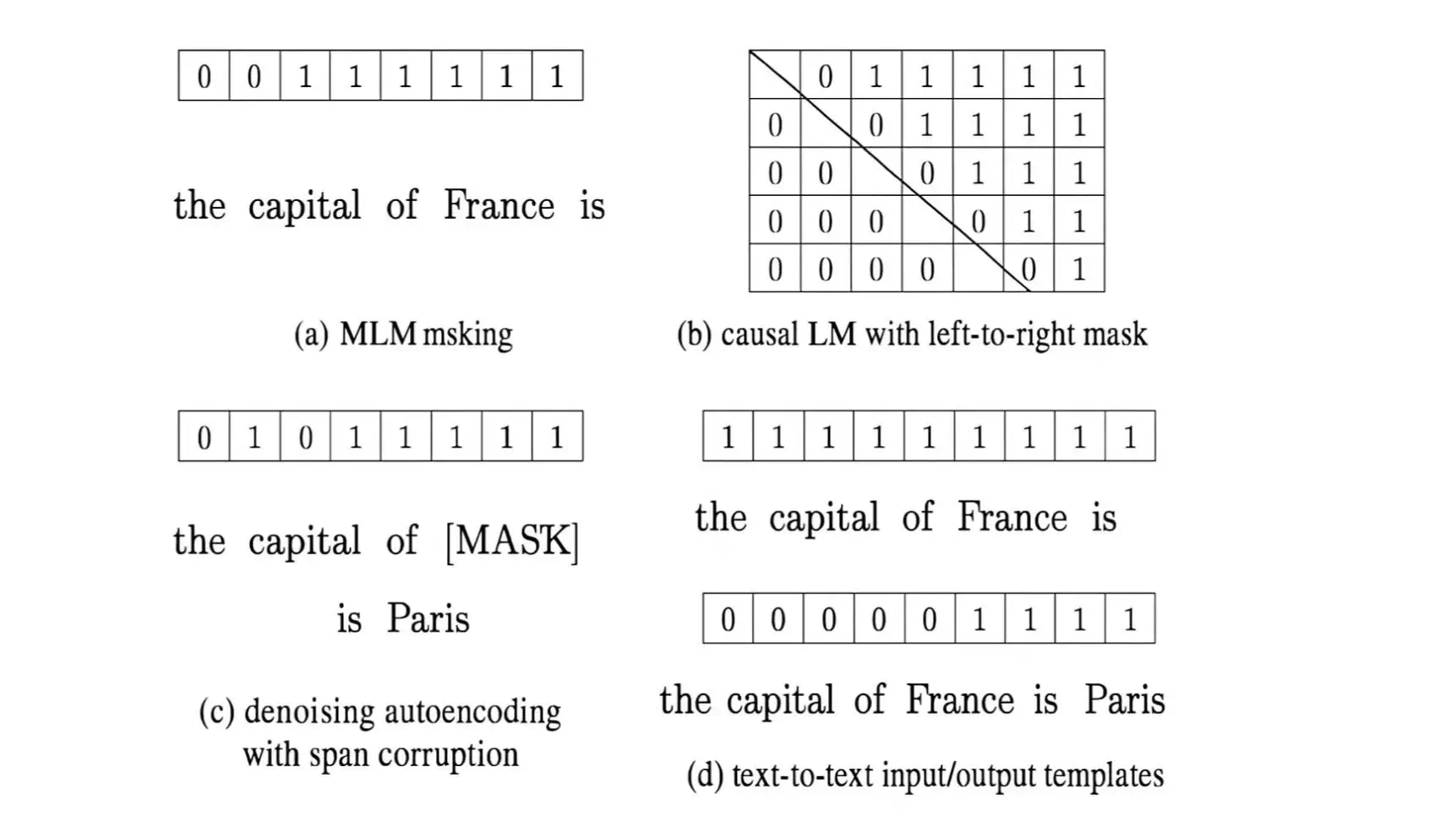

Pretraining Objectives and Adaptation

Pretraining on large unlabeled corpora is the central recipe of modern NLP. Masked Language Modeling (MLM) as popularized by BERT trains bidirectional representations by masking tokens and predicting them from context. Variants such as RoBERTa drop next-sentence prediction and re-sample the masked positions dynamically during training. Autoregressive next-token prediction (causal LM) trains models to predict each token conditioned on preceding tokens; such models exhibit strong few-shot and in-context learning properties, with performance scaling predictably with parameter count and data size. Text-to-text framing, introduced by T5, casts diverse tasks into a unified format with task-specific prompts, enabling a single model to solve translation, summarization, and classification by changing input templates. Adaptation strategies include supervised finetuning on labeled datasets, instruction tuning on curated prompt–response pairs, and preference-based optimization such as Reinforcement Learning from Human Feedback (RLHF). Each stage nudges the base model toward helpfulness, honesty, and harmlessness while balancing creativity and faithfulness to sources.
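
The difference between the two pretraining objectives is easiest to see in how training examples are built. The sketch below is a simplification under stated assumptions: `mlm_example` and `causal_lm_example` are hypothetical helper names, the 15% masking rate is a common default rather than a requirement, and the BERT refinement of sometimes substituting a random or unchanged token instead of `[MASK]` is omitted.

```python
import random

MASK = "[MASK]"


def mlm_example(tokens, mask_prob=0.15, seed=0):
    """Build (inputs, labels) for masked language modeling.

    Masked positions carry the original token as the label; all other
    positions get None, meaning they do not contribute to the loss.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


def causal_lm_example(tokens):
    """Build (inputs, targets) for next-token prediction.

    The target at position t is the token at position t + 1, so every
    position contributes to the loss and only left context is visible.
    """
    return tokens[:-1], tokens[1:]


sentence = ["the", "cat", "sat", "down"]
masked_inputs, masked_labels = mlm_example(sentence, mask_prob=0.5, seed=1)
lm_inputs, lm_targets = causal_lm_example(sentence)
```

In the masked setting the model sees context on both sides of each gap, while in the causal setting every position is a prediction target conditioned only on what precedes it.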

Instruction Tuning and Preference Optimization

Instruction tuning uses datasets of natural-language instructions paired with desired outputs to align models to follow human directives. It often improves zero-shot performance on user prompts that differ from pretraining distributions. Building on instruction tuning, RLHF collects human preference comparisons between pairs of model outputs to train a reward model; the policy (language model) is then optimized to maximize the learned reward subject to constraints (e.g., KL penalties to remain close to the supervised baseline). Alternatives to RLHF include Direct Preference Optimization and other implicit preference objectives that reduce training complexity while retaining alignment benefits. In all cases, the quality and diversity of preference data are crucial: narrow or biased preference sets can entrench model biases, while high-quality annotation protocols and rater training can improve safety and helpfulness outcomes.
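
As one illustration of an implicit preference objective, the per-pair Direct Preference Optimization loss can be sketched as follows. This is a hedged sketch: the function and argument names are hypothetical, the inputs are assumed to be summed token log-probabilities of each full response under the trainable policy and a frozen reference model, and `beta=0.1` is only an example value.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Each margin measures how much more likely the policy makes a response
    than the reference does. The loss falls as the chosen margin grows
    relative to the rejected margin.
    """
    chosen_margin = policy_logp_chosen - ref_logp_chosen
    rejected_margin = policy_logp_rejected - ref_logp_rejected
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) equals softplus(-logits).
    return softplus(-logits)


# Policy identical to the reference: the loss equals log(2).
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)
# Policy has shifted probability toward the chosen response: the loss is lower.
improved = dpo_loss(-9.0, -13.0, -10.0, -12.0)
```

The reference log-probabilities play a role comparable to the KL penalty in RLHF: the policy is rewarded only for changes relative to the baseline model, and `beta` controls how strongly deviations are weighted.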

Parameter-Efficient Finetuning (PEFT) and Quantization

Full finetuning of large models is expensive in memory and compute and risks catastrophic forgetting. Parameter-Efficient Finetuning (PEFT) methods, including adapters, prefix/prompt tuning, and Low-Rank Adaptation (LoRA), allow practitioners to freeze the base model and train a small number of additional parameters to specialize the model for a domain. LoRA injects low-rank matrices into attention and MLP layers, dramatically reducing trainable parameters while preserving quality. Quantization further compresses models by representing weights and activations with lower precision (e.g., 8-bit or 4-bit). QLoRA combines 4-bit quantization of the base model with LoRA adapters, enabling single-GPU finetuning for models that would otherwise be infeasible to adapt. These techniques have democratized domain adaptation and allowed organizations to keep models close to on-prem data, reducing privacy risks and operational costs.
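
The low-rank update behind LoRA can be sketched in a few lines of plain Python. The names `lora_forward` and `lora_param_counts`, the list-of-lists matrix layout, and the `alpha / r` scaling convention are assumptions made for illustration; real implementations operate on framework tensors and apply the update inside specific layers.

```python
def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha=16.0):
    """Compute W x + (alpha / r) * B (A x).

    W is the frozen base weight (d_out x d_in); A (r x d_in) and
    B (d_out x r) are the only trainable matrices.
    """
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_counts(d_in, d_out, r):
    """Return (full finetuning parameters, LoRA trainable parameters) for one matrix."""
    return d_in * d_out, r * (d_in + d_out)


W = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weight
A = [[1.0, 1.0]]               # rank r = 1
B_zero = [[0.0], [0.0]]        # B starts at zero, so the adapter is initially a no-op
x = [1.0, 2.0]

unchanged = lora_forward(W, A, B_zero, x)
adapted = lora_forward(W, A, [[1.0], [0.0]], x, alpha=2.0)
full, trainable = lora_param_counts(d_in=4096, d_out=4096, r=8)
```

For a single 4096 by 4096 projection at rank 8, the adapter trains 65,536 parameters instead of 16,777,216, roughly 0.4% of the full matrix, which is where the memory savings come from.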

Retrieval-Augmented Generation (RAG)

Parametric language models encode facts in their weights, making updates slow and provenance opaque. Retrieval-Augmented Generation (RAG) addresses these limitations by conditioning generation on passages retrieved from an external corpus. A typical pipeline encodes the user query with a dense retriever (e.g., dual-encoder/DPR), performs approximate nearest-neighbor search in a vector index (e.g., FAISS, ScaNN), and provides the top-k passages to the generator. RAG-Sequence conditions the entire response on a fixed retrieved set, while RAG-Token allows the model to attend to different passages at different decoding steps. RAG improves factual grounding, enables citations, and allows near real-time updates by refreshing the underlying index, though it introduces new challenges in retrieval quality, latency, and prompt budgeting.
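
The retrieve-then-generate flow described above can be sketched with toy data. Everything here is an illustrative assumption: the two-dimensional embeddings are hand-written stand-ins for a dense encoder, exhaustive cosine search stands in for an approximate nearest-neighbor index such as FAISS or ScaNN, and `build_prompt` is a hypothetical template rather than a prescribed format.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def retrieve(query_vec, index, k=2):
    """Return the k passages whose embeddings are most similar to the query.

    `index` is a list of (passage_text, embedding) pairs. A production
    system would replace this linear scan with an ANN index.
    """
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Assemble a grounded prompt with numbered passages for citation."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the numbered passages and cite them.\n"
        f"{context}\n"
        f"Question: {question}\nAnswer:"
    )


index = [
    ("Refunds are issued within 14 days.", [0.9, 0.1]),
    ("The office is closed on public holidays.", [0.0, 1.0]),
    ("Refund requests require a receipt.", [0.7, 0.7]),
]
top = retrieve([1.0, 0.0], index, k=2)
prompt = build_prompt("How long do refunds take?", top)
```

Updating the system's knowledge then amounts to changing `index`, with no change to model weights, and the value of `k` directly trades grounding coverage against the prompt budget.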

Scaling and Sparse Mixture-of-Experts (MoE)

Empirically, larger models trained on more data generally perform better and exhibit stronger in-context learning. However, dense scaling is computationally expensive. Sparse Mixture-of-Experts (MoE) architectures activate only a subset of expert sub-networks per token, increasing parameter counts without proportionally increasing compute for each forward pass. Switch Transformers simplified routing to a single active expert per token in each MoE layer, enabling trillion-parameter models. Recent open models (e.g., Mixtral 8×7B) demonstrate competitive performance at favorable latency–quality tradeoffs. Despite their promise, MoE systems require careful routing stabilization, load-balancing losses, and engineering for distributed training, and they complicate inference caching and serving.
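
Sparse routing can be illustrated with a small top-k gating sketch. The names `route_top_k` and `moe_layer` are hypothetical, the router logits are passed in directly (in a real layer they come from a learned projection of the token representation), and renormalizing the selected gate weights is one common convention rather than the only one.

```python
import math


def softmax(xs):
    """Softmax with max-subtraction for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return {i: probs[i] / mass for i in top}


def moe_layer(x, experts, router_logits, k=2):
    """Run only the selected experts on token vector x and mix their outputs."""
    gates = route_top_k(router_logits, k)
    out = [0.0] * len(x)
    for i, gate in gates.items():
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
    lambda x: [0.0 for _ in x],
]
logits = [5.0, 0.0, -1.0, 1.0]

switch_style = moe_layer([1.0, 2.0], experts, logits, k=1)  # one expert per token
top2_gates = route_top_k(logits, k=2)                       # two experts per token
```

With four experts and `k=1`, the layer holds four experts' worth of parameters but spends one expert's worth of compute per token. The load-balancing losses mentioned above exist because nothing in this routing rule prevents most tokens from choosing the same expert.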

Evaluation

Evaluation in NLP encompasses intrinsic metrics, task-specific scores, and holistic frameworks. For classification and sequence labeling, accuracy and F1 remain standard. In machine translation, BLEU measures modified n-gram precision with a brevity penalty; while useful, it can be insensitive to semantic adequacy and style. Summarization commonly uses ROUGE (n-gram overlap and longest common subsequence), though these lexical metrics can reward extractive copying over abstractive content selection. Embedding-based metrics such as BERTScore compute similarity in contextual representation space and often correlate better with human judgments for generation tasks. Benchmark suites elevate comparability: GLUE and SuperGLUE aggregate diverse NLU tasks with standardized splits and leaderboards; BIG-bench assesses emergent abilities across many contributors’ tasks; HELM takes a scenario-driven perspective, reporting multiple metrics (accuracy, calibration, robustness, fairness, and toxicity) to encourage multi-dimensional model assessment.
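
The BLEU components mentioned above (clipped n-gram precision and the brevity penalty) can be sketched for a single reference as follows. This is a simplified illustration: the function names are hypothetical, only one reference is supported, `max_n=2` is chosen for brevity where BLEU conventionally uses up to 4-grams, and no smoothing is applied, so scores reported in papers should come from a standard implementation.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram is credited at most
    as many times as it occurs in the reference."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def brevity_penalty(cand_len, ref_len):
    """Penalize candidates that are shorter than the reference."""
    if cand_len == 0:
        return 0.0
    return 1.0 if cand_len > ref_len else math.exp(1.0 - ref_len / cand_len)


def bleu(candidate, reference, max_n=2):
    """Geometric mean of modified precisions times the brevity penalty."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    return brevity_penalty(len(candidate), len(reference)) * geo_mean


reference = "the cat is on the mat".split()
degenerate = "the the the the the the the".split()
p1 = modified_precision(degenerate, reference, 1)  # clipping caps credit for "the" at 2 of 7
```

The degenerate candidate shows why clipping matters: without it, repeating a frequent reference word would score perfect unigram precision. It also shows the limitation noted above, since the metric counts surface overlap and cannot tell whether a paraphrase preserves meaning.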

Robustness, Privacy, and Safety

Deployed NLP systems face distribution shift, adversarial prompts, prompt injection, and jailbreaking attempts. Defense-in-depth combines input validation, retrieval grounding, output filtering, structured decoding, and continuous red-teaming. Privacy concerns arise when models memorize training data: differential privacy bounds information leakage by adding noise during training, whereas techniques like PATE transfer knowledge from private teacher ensembles to a student model without exposing sensitive examples. Governance frameworks emphasize consented data use, data minimization, auditable pipelines, and incident response. Ethical risk taxonomies catalog harms including discrimination, toxicity, privacy leakage, misinformation, dual-use misuse, and environmental costs; mitigations include dataset curation, policy-tuned decoding, refusal mechanisms, transparency reports, and energy-aware training and serving. Importantly, safety evaluations must reflect local context and regulatory requirements, and should be repeated as models drift or are adapted to new domains.
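
One common way to add noise during training is the DP-SGD recipe: clip each example's gradient, sum, add Gaussian noise scaled to the clipping bound, and average. The sketch below illustrates only that mechanical step under stated assumptions: `clip` and `dp_average_gradient` are hypothetical names, gradients are plain lists, and the privacy accounting that turns a noise multiplier into an (epsilon, delta) guarantee is not shown.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip per-example gradients, add Gaussian noise to their sum, then average.

    Clipping bounds how much any single example can influence the update;
    the noise standard deviation is noise_multiplier * max_norm.
    """
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


grads = [[3.0, 4.0], [0.3, 0.4]]  # the first example has norm 5 and will be clipped
rng = random.Random(0)
no_noise = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=0.0, rng=rng)
private = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng)
```

The clipping step is what makes the noise meaningful: because no single example can move the summed gradient by more than `max_norm`, noise proportional to that bound can mask any individual contribution.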

Productionization Patterns

Modern NLP systems are more than a single model; they are pipelines with guardrails, retrieval layers, orchestrators, and observability. A typical enterprise architecture comprises: (1) a policy and prompt layer that validates inputs, enforces safety and compliance, and injects system instructions; (2) a retrieval layer (vector database + classical keyword search) that grounds responses on internal sources and emits citations; (3) a reasoning and generation layer that selects tools (retrievers, calculators, code runners), composes plans, and generates outputs; (4) post-processing that normalizes citations, redacts sensitive fields, and formats answers; and (5) logging and analytics that drive A/B testing, offline evaluations, rater review, and incremental improvements. For cost and latency, teams use quantization, efficient batching, KV-cache reuse, speculative decoding, and dynamic routing across model sizes. For adaptability, PEFT methods (LoRA/QLoRA) let teams ship domain updates quickly without retraining from scratch. Observability includes quality dashboards, safety incident trackers, drift monitors for retrieval corpora, and feedback channels for end-users and subject-matter experts.

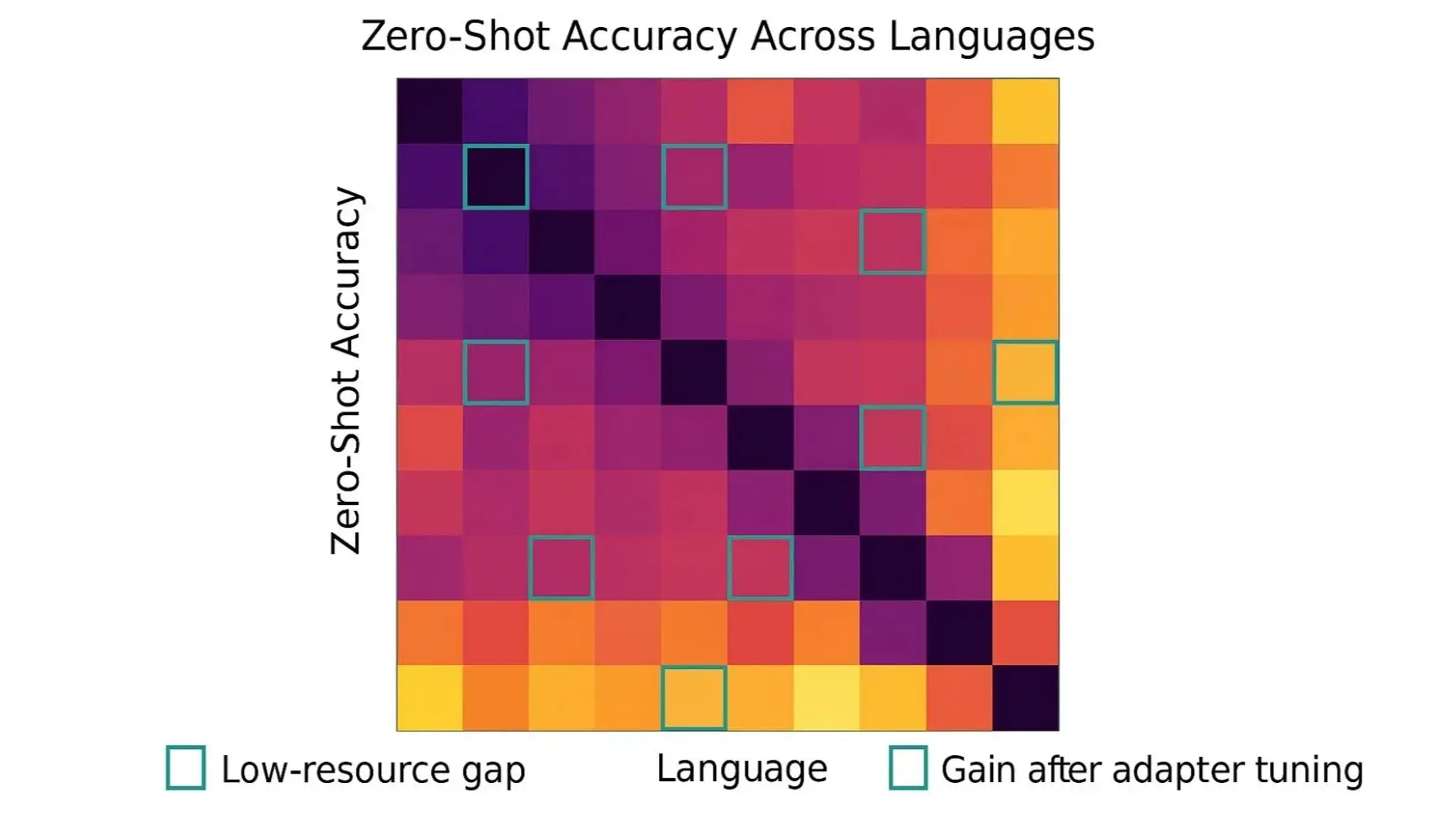

Multilingual and Low-Resource NLP

Multilingual NLP confronts typological diversity, script variation, and data scarcity. Subword and byte-level tokenization improves coverage, but careful data balancing and contamination control remain crucial. Cross-lingual transfer lets models trained on high-resource languages serve low-resource targets, and mixture-of-experts can allocate capacity to language clusters. Parameter-efficient adapters allow per-language specialization without duplicating full model weights. Despite progress, many dialects and minority languages lack evaluation datasets, which underscores the need for participatory data creation, governance aligned with local norms, and equitable resource allocation. A practical recipe for multilingual deployments includes: (a) domain-appropriate corpora with legal clearance; (b) tokenization audits for script coverage; (c) baseline evaluation with human raters fluent in the target language; (d) LoRA adapters for domain terms; and (e) continual retrieval index updates to reflect evolving terminology and regulations.

Case Studies (Illustrative Templates)

The following case-study templates can be instantiated with domain-specific details from your organization. Replace the placeholders with your data sources, ontologies, and KPIs to generate internal documentation or whitepapers tailored to each business unit.

RAG for Enterprise Knowledge:

Objective: Provide grounded answers with citations from an enterprise corpus (policies, handbooks, product specs). Pipeline: Ingest documents; chunk with structure-aware heuristics; embed with a multilingual encoder; store in a vector DB with metadata filters; hybrid search combining BM25 and dense retrieval; prompt templates that reserve context budget for citations; answer synthesis with attributions; fallbacks for low-confidence cases. Evaluation: Offline retrieval precision/recall; QA-based factuality checks; human rater calibration; production telemetry on citation click-through; A/B experiments comparing prompt variants. Risks: stale documents, overconfident generation when retrieval fails, privacy exposure in logs; mitigations include index freshness SLOs, guarded refusal behaviors, and data minimization.
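
The template above does not say how BM25 and dense results should be combined. One simple option, shown here purely as an assumption, is reciprocal rank fusion (RRF), which needs only the two ranked lists and not their raw scores. The function name, the document IDs, and the constant `k=60` (a conventional default) are illustrative.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document scores the sum of 1 / (k + rank) over the lists it
    appears in, so documents ranked well by several retrievers rise.
    Ties are broken by document ID to keep the output deterministic.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_ranking = ["policy-a", "policy-b", "policy-c"]    # hypothetical keyword results
dense_ranking = ["policy-b", "policy-d", "policy-a"]   # hypothetical embedding results
fused = reciprocal_rank_fusion([bm25_ranking, dense_ranking])
```

Rank-based fusion sidesteps the problem that BM25 scores and cosine similarities live on different scales. Weighted score interpolation is an alternative when calibrated scores are available.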

Summarization at Scale:

Objective: Generate executive summaries of long documents (reports, transcripts) that preserve key facts, decisions, and action items. Pipeline: structure-aware chunking (headings, sections); encoder–decoder or instruction-tuned decoder with long-context strategies; topic-guided prompting to cover essential sections; constraint templates for numbers/dates; optional RAG for evidence citations. Evaluation: ROUGE, BERTScore, question-answering probes for factual consistency, and human evaluations focusing on coverage and faithfulness. Risks: hallucinated numbers or names; mitigations include constrained decoding for numerals, post-hoc fact checking against source passages, and human-in-the-loop for critical documents.

Machine Translation Modernization:

Objective: Replace legacy SMT with Transformer-based MT tailored to brand style and domain terminology. Pipeline: parallel corpus cleanup; terminology extraction; base MT model finetuned with LoRA adapters; in-domain validation with human translators; post-editing workflows integrated with CAT tools. Evaluation: BLEU and COMET; targeted linguistic QA for formality, tone, and brand compliance. Risks: domain drift, low-resource language gaps; mitigations include active learning to prioritize new segments and adapter updates per domain.

Open Problems and Research Directions

Controllability and Safety: Stronger preference learning, policy-aware decoding, and transparent compliance checks remain active areas. Factuality and Attribution: Combining symbolic reasoning, retrieval, and calibrated uncertainty to reduce hallucinations. Long Context and Memory: Efficient attention (linear, grouped-query, and recurrent variants), external memory, and retrieval-augmented summarization for multi-document reasoning. Low-Resource and Inclusive NLP: Data creation with local communities, better cross-lingual transfer, and fair evaluation across dialects and writing systems. Efficiency and Sustainability: Beyond 4-bit quantization, structured sparsity, speculative decoding with verifiable approximations, and robust MoE routing for production serving. Multimodality: Joint language–vision–audio models that ground text in perceptual inputs, enabling richer agents for enterprise and assistive applications. Evaluation Reform: Scenario-based, multi-metric, and user-centered evaluation that captures safety, calibration, and social impacts, not just task accuracy, will be critical to responsible progress.

Conclusion

NLP’s transformation in recent years stems from the Transformer architecture and the scaling of pretraining. Instruction tuning and preference optimization make models more helpful and aligned; PEFT and quantization democratize adaptation; and RAG restores provenance and updatability. Evaluation is evolving from single scores to holistic frameworks measuring quality, reliability, and safety. Looking ahead, the field must reconcile powerful generalization with controllability, factuality, and equitable coverage across languages and domains, while delivering efficient, privacy-preserving, and robust systems. For practitioners, the pragmatic path involves: (i) grounding outputs with retrieval, (ii) adopting PEFT and quantization for efficiency, (iii) instituting rigorous governance for data and safety, and (iv) continuously evaluating with user-centered metrics.

References

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.

- Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019, June). BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) (pp. 4171-4186).

- Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., ... & Liu, P. J. (2020). Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of Machine Learning Research, 21(140), 1-67.

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., ... & Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877-1901.

- Liu, Z., Lin, W., Shi, Y., & Zhao, J. (2021, August). A robustly optimized BERT pre-training approach with post-training. In China National Conference on Chinese Computational Linguistics (pp. 471-484). Cham: Springer International Publishing.