Introduction: Why Choosing the Right System Matters More Than Ever

Most companies start looking for an AI visual inspection system when manual inspection can no longer keep up. Defects slip through, inspection teams struggle with speed, and quality issues are discovered too late to fix cheaply. At that point, choosing the wrong system is not a small setback; it becomes an operational risk that affects cost, output, and internal confidence.

Companies choose AI visual inspection systems to improve inspection accuracy and consistency, but a poorly chosen system often leads to higher costs, delayed deployment, and limited value in real manufacturing operations. This usually happens when systems are evaluated in demos instead of real production conditions.

In practice, we have seen quality control automation slow workflows down instead of improving them because lighting changes, product variation, or integration complexity were not considered early. These mistakes are common across industrial environments and are difficult to reverse once deployment begins. This guide focuses on how companies can avoid those traps and choose a system that works on the factory floor, not just on slides.

Why Many Companies Struggle to Choose the Right Inspection System

Most companies don’t struggle because inspection systems are complex. They struggle because every vendor sounds the same. Accuracy numbers look impressive, dashboards look clean, and demos run perfectly. But none of that shows how the system behaves once it is placed next to a noisy production line.

Vendor claims are a big part of the problem. We’ve seen teams choose systems based on demo videos, only to realize later that lighting changes or surface reflections break detection accuracy. These issues don’t appear in sales meetings. They appear at 2 a.m. during a production run.

The fear of choosing wrong is real. Inspection automation touches quality control, production speed, and reporting. Once deployed, backing out is expensive. Many teams delay decisions not because they lack budget, but because no one wants to own a failed rollout.

Manual inspection adds pressure, but also confusion. Everyone knows human inspection is inconsistent, especially at high speeds. At the same time, rushed automation often replaces one problem with another. We’ve seen systems that still needed operators to double-check results, making quality control automation slower than before.

Then comes ROI. Management expects quick results. When integration takes longer or defect reduction is gradual, teams are forced to defend the investment instead of improving manufacturing operations. This pattern repeats across industrial environments, and it’s why choosing the right inspection system is harder than most people expect.

What Is an AI Visual Inspection System?

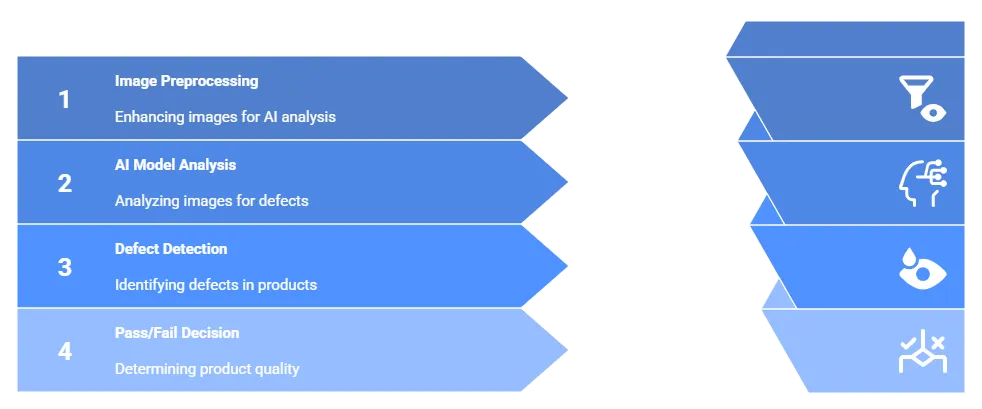

An AI visual inspection system is an automated quality inspection system that uses cameras and trained models to check products in real time, detect defects, and support inspection decisions directly within an industrial inspection workflow, without depending on manual visual checks.

In practice, the system watches the production line continuously. Images are captured, analyzed instantly, and defects are flagged as they occur.

Where many systems fail is not technology, but reality. Lighting changes, surface glare, and speed variations quickly test how reliable the defect detection process is inside manufacturing operations.

When done right, real-time inspection becomes part of normal production, helping teams catch issues early across industrial environments without slowing work down.

When Should Companies Move From Manual to Automated Inspection?

Manual inspection becomes a problem when production speed increases. Inspectors miss defects simply because they cannot look fast enough, especially during long shifts.

Consistency is usually the first warning sign. Results change by operator and time of day, even when the product stays the same.

Scale forces the decision. As production line inspection grows, adding more people increases cost but does not fix variation.

Automation works only when the factory is ready for it. Without stable processes and clear defect definitions, automation fails just as manual inspection does.
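One way to put a number on operator-to-operator variation is to have two inspectors judge the same parts and measure how often they agree beyond chance. The sketch below uses Cohen's kappa for that. The function name and the sample verdicts are illustrative assumptions, not data from a real line.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two inspectors, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Share of parts where both inspectors gave the same verdict.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each inspector labeled at random
    # with their own observed label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both inspectors used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical verdicts from two inspectors on the same ten parts.
inspector_a = ["ok", "ok", "defect", "ok", "defect", "ok", "ok", "defect", "ok", "ok"]
inspector_b = ["ok", "defect", "defect", "ok", "ok", "ok", "ok", "defect", "ok", "defect"]

kappa = cohens_kappa(inspector_a, inspector_b)
print(f"kappa = {kappa:.2f}")
```

A value near 1 means the inspectors agree almost everywhere. A low value suggests the defect definitions themselves are unclear, which is worth fixing before any model is trained on those labels.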

Key Inspection System Evaluation Criteria Companies Should Focus On

Choosing an inspection system is less about features and more about how the system behaves in real manufacturing operations. The right evaluation criteria help avoid costly mistakes that only appear after deployment.

For example, on high-speed automotive production lines, inspection accuracy often drops when lighting shifts between day and night. We have seen systems that performed well during trials fail to detect surface defects once reflections changed, forcing teams to slow the line or reintroduce manual checks.

• Inspection Accuracy and Consistency

Accuracy must hold under real conditions. Systems that perform well in demos often struggle with lighting changes, surface glare, or product variation. Consistency matters more than peak accuracy numbers.

• Real-Time Performance on the Production Line

Inspection must keep pace with production. If analysis slows the line or creates delays, the system adds friction instead of value.

• Integration With Existing Workflows

Many failures happen during integration. Inspection systems should fit into existing manufacturing operations without long downtime or complex rework.

• Scalability Across Operations

A system that works on one line may fail at scale. Companies should evaluate whether inspection performance stays stable across shifts, sites, and growing volumes.
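The real-time criterion above can be checked with simple arithmetic during a trial: line speed sets a time budget per part, and the slowest inspections (not the average) must fit inside it. The sketch below is a minimal version of that check. It assumes one part is inspected at a time with no pipelining, and the function names, the 99th percentile, the 80% headroom, and the latency samples are all illustrative assumptions.

```python
import math

def time_budget_ms(parts_per_minute):
    """Time available to inspect one part at the given line speed."""
    return 60_000 / parts_per_minute

def percentile(values, pct):
    """Nearest-rank percentile of a list of measurements."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def keeps_pace(latencies_ms, parts_per_minute, pct=99, headroom=0.8):
    """True if the chosen latency percentile fits in the budget with headroom."""
    budget = time_budget_ms(parts_per_minute) * headroom
    return percentile(latencies_ms, pct) <= budget

# Hypothetical per-part inspection times (ms) logged during a line trial.
latencies = [38, 41, 40, 39, 44, 42, 95, 40, 43, 41]

print(time_budget_ms(600))         # budget per part at 600 parts/minute
print(keeps_pace(latencies, 600))  # does the slowest tail fit that budget?
print(keeps_pace(latencies, 300))  # same system on a slower line
```

Checking a high percentile rather than the mean is deliberate: a system that is fast on average but occasionally stalls is the one that ends up slowing the line.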

Automated Inspection System Requirements to Check Before Selection

Start with hardware. Cameras, lighting, and mounting must handle real factory conditions. Systems often fail because basic hardware is not stable on the production floor. Check software compatibility early. Inspection software should connect easily with existing systems. Many deployments slow down because integration was assumed, not tested.

Maintenance is usually underestimated. Models need updates, and small process changes can affect results. Without a clear maintenance plan, inspection accuracy drops over time. Long-term support matters more than initial setup. When issues appear after deployment, slow support turns small problems into operational delays across manufacturing operations.

Inspection Automation Decision Factors That Affect ROI

Cost alone is a poor decision signal. Lower-priced systems often require more manual checks, more tuning, and more downtime, which quietly increases long-term expense.

In multiple manufacturing assessments, teams have seen defect-related rework drop by 20–30% after stabilizing automated inspection on high-volume lines. The gains came from catching defects earlier, not from increasing inspection speed.

Defect reduction is where real value appears. When defects are caught earlier, scrap drops, rework reduces, and downstream issues become easier to control. This impact is often underestimated during evaluation.

Operational efficiency matters as much as accuracy. Systems that slow the line or require frequent human intervention reduce ROI, even if detection rates look good on paper.

Long-term savings depend on stability. Reliable inspection systems reduce staffing pressure, improve reporting, and scale better across manufacturing operations, especially in industrial environments with growing production demands.
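The point about purchase price being a poor signal is easier to see with a rough payback calculation. The sketch below compares two made-up systems. Every figure is a hypothetical placeholder, and the rework reductions are simply picked from the 20–30% range mentioned above. The function name and cost categories are assumptions for illustration, not a complete cost model.

```python
def payback_months(upfront_cost, annual_rework_cost, rework_reduction,
                   annual_recheck_labor, annual_maintenance):
    """Months until cumulative net savings cover the upfront cost.

    Returns None when running costs eat all the savings.
    """
    annual_savings = annual_rework_cost * rework_reduction
    net_annual = annual_savings - annual_recheck_labor - annual_maintenance
    if net_annual <= 0:
        return None
    return upfront_cost / net_annual * 12

# Hypothetical cheaper system: lower price, but operators still re-check results.
cheap = payback_months(
    upfront_cost=80_000,
    annual_rework_cost=400_000,
    rework_reduction=0.20,
    annual_recheck_labor=50_000,
    annual_maintenance=15_000,
)

# Hypothetical stable system: higher price, little manual re-checking.
stable = payback_months(
    upfront_cost=150_000,
    annual_rework_cost=400_000,
    rework_reduction=0.25,
    annual_recheck_labor=10_000,
    annual_maintenance=20_000,
)

print(f"cheaper system pays back in {cheap:.0f} months")
print(f"stable system pays back in {stable:.0f} months")
```

With these placeholder numbers the cheaper system takes far longer to pay back, because manual re-checks absorb most of the rework savings. Real inputs will differ, but the structure of the comparison stays the same.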

Common Mistakes Companies Make When Choosing Inspection Automation

The first mistake is trusting demos too much. Demos are staged. Real production is noisy, fast, and unpredictable. Systems that look perfect in demos often break once they face real parts and real speed.

Another mistake is ignoring the shop floor. Lighting shifts, parts vary, operators change things. Many inspection systems are never tested under these conditions before purchase.

Integration is also underestimated. Inspection automation rarely fits cleanly into existing manufacturing operations. When integration drags on, production suffers and teams lose confidence fast.

Cost-focused decisions cause long-term damage. Cheaper systems usually need more tuning, more manual checks, and more support. Over time, they cost more across industrial environments.

Automated Inspection Systems vs Manual Inspection

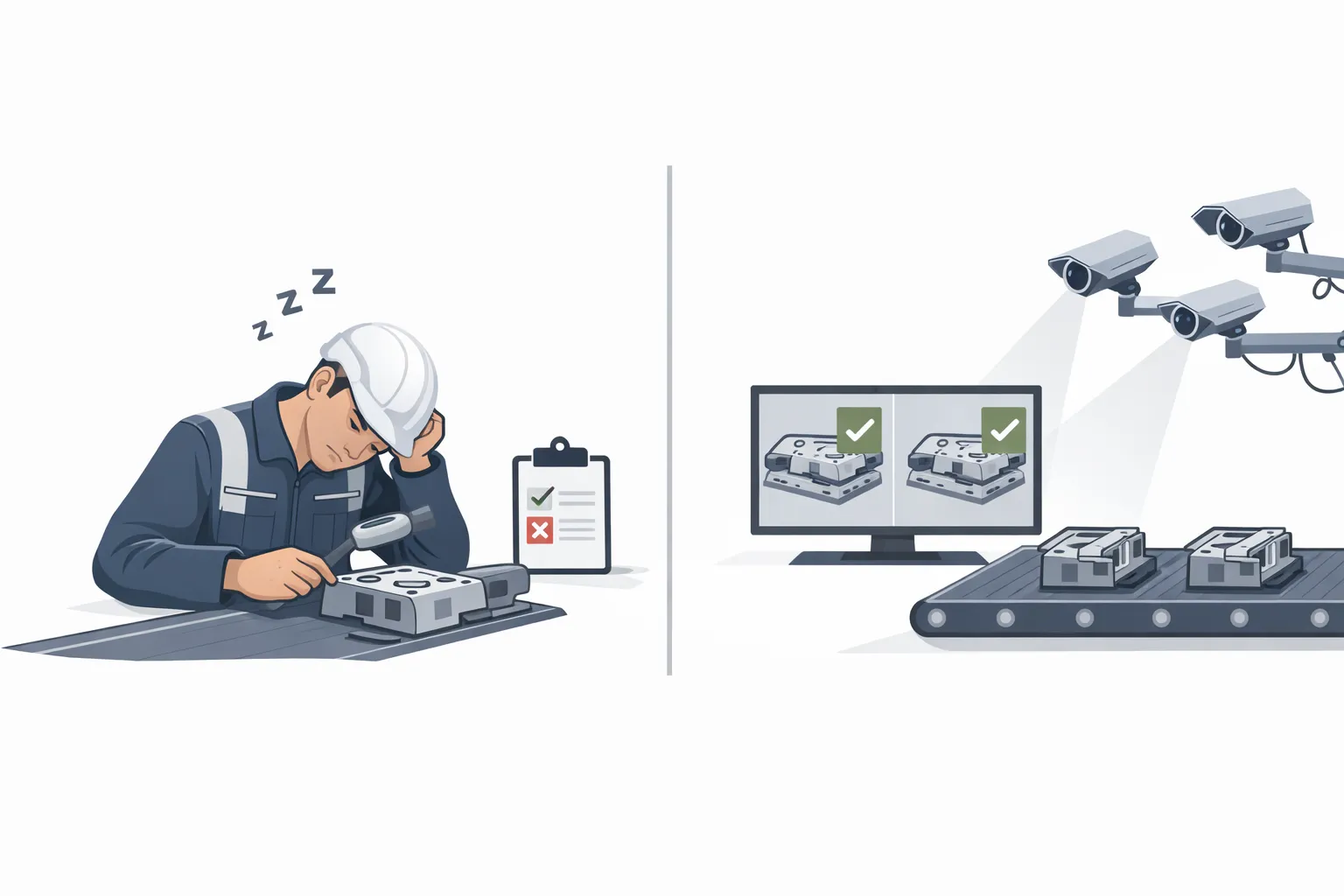

The difference becomes clear once production speed and volume increase. Manual inspection depends on people. Automated inspection depends on systems. Their limits are not the same.

| Factor | Manual Inspection | Automated Inspection Systems |
|---|---|---|
| Speed | Slows down as line speed increases | Matches production line speed |
| Accuracy | Drops with fatigue and repetition | Stable when trained correctly |
| Consistency | Varies by operator and shift | Same result every cycle |
| Scalability | Needs more people to scale | Scales across lines and sites |
| Cost over time | Lower upfront, higher long term | Higher upfront, lower long term |

Manual inspection works at low volumes. It breaks under pressure. Automated inspection systems perform best when production line inspection must stay fast and repeatable across manufacturing operations.

Questions Companies Should Ask Before Finalizing an Inspection System

1. How do we evaluate inspection performance in real conditions?

Test the system on your actual production line, not in a demo setup. Real lighting, real speed, real product variation. If accuracy drops outside controlled conditions, the system is not ready.

2. What accuracy level is acceptable for our use case?

Chasing perfect accuracy is unrealistic. The goal is stable accuracy that holds across shifts and batches. A system that performs consistently at scale is more valuable than one that only posts high numbers in the lab.

3. How easily can the system scale across operations?

Ask whether the same setup works across lines, plants, and products. If scaling requires heavy re-training or constant tuning, deployment will slow down manufacturing operations.

4. What support is required after deployment?

Deployment is not the end. Models drift, processes change, and issues appear. If support is slow or unclear, small problems will grow into operational delays across industrial environments.
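For the first two questions, a production trial is most useful when results are broken down by condition instead of being reported as a single accuracy figure. The sketch below computes the share of real defects caught per condition and lists the conditions that fall below a target. The record format, the condition names, and the 0.9 threshold are illustrative assumptions.

```python
from collections import defaultdict

def recall_by_condition(records):
    """Share of truly defective parts that the system flagged, per condition.

    Each record is (condition, is_defective, was_flagged).
    """
    caught = defaultdict(int)
    total = defaultdict(int)
    for condition, is_defective, was_flagged in records:
        if is_defective:
            total[condition] += 1
            caught[condition] += int(was_flagged)
    return {c: caught[c] / total[c] for c in total}

def weak_conditions(recalls, min_recall):
    """Conditions where defect recall falls below the agreed minimum."""
    return sorted(c for c, r in recalls.items() if r < min_recall)

# Hypothetical trial log: verdicts checked against a manual audit.
trial = [
    ("day", True, True), ("day", True, True), ("day", True, True),
    ("day", True, True), ("day", False, False), ("day", False, False),
    ("night", True, True), ("night", True, False), ("night", True, True),
    ("night", True, False), ("night", False, False), ("night", False, True),
]

recalls = recall_by_condition(trial)
print(recalls)
print(weak_conditions(recalls, min_recall=0.9))
```

The same breakdown can be repeated by line, plant, or product to answer the scaling question, and rerun after deployment to spot drift before it reaches customers.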

How Ombrulla Approaches Inspection Automation

Ombrulla works on inspection automation in industrial environments where conditions change daily. Lighting shifts, products vary, and production does not pause for tuning.

The work is focused on enterprise deployment. Systems are expected to run across lines and sites, not just single use cases, and to fit into existing manufacturing operations without disruption.

Inspection automation is treated as part of the workflow. Decisions are made around stability and long-term use, not short-term benchmarks.

Final Checklist for Choosing the Right AI Visual Inspection System

First, check evaluation basics. If the system fails under real production speed, lighting, or variation, don’t move forward. Demos don’t count.

Next, confirm requirements. Hardware stability, software fit, and maintenance effort must be known upfront. Guessing here usually causes delays later.

Then, face the risks. Integration takes longer than expected. Data changes. Models drift. If no one owns these risks, the system will struggle.

Last, check readiness. Automation works only when processes are stable and teams are prepared. Without that, even good inspection systems fail in manufacturing operations.

Conclusion: Making a Confident and Informed Decision

Choosing automated inspection systems is a judgment call, not a feature comparison. Most failures happen because teams trust demos instead of real production tests.

Confidence in decision making comes from seeing how a system behaves under speed, variation, and pressure. If it works there, it usually works long term.

Ombrulla approaches inspection automation with that reality in mind, focusing on systems that survive real manufacturing operations.

Learn more when you are ready to evaluate inspection automation without guesswork.